*Trigger Warning: This article contains sensitive content that references sexual abuse and child abuse.

“As far back as I can remember, I see cameras, adults touching me, giving me something to drink. I see lingerie in miniature sizes. My earliest memories are of being forced to pose for child pornography, of being sexually abused.

I grew up near a major interstate highway. My abuser would bring me to rest stops so truckers could rape me. He connected with them via CB radio, and would bring me in a van so the deed could be done right there along the highway, or pick up the trucker and drive them back to wherever I was waiting. I remember them, from when I was just six years old.

There were photos and “parties,” too. I was made to dress in lingerie and then brought to warehouses, where men would be gathered with cameras. Other kids were there, too. We were given alcohol or injected with drugs to make sure we stayed calm, hazy, compliant.” – survivor of sex trafficking and CSAM (read the full story here.)

United States federal law defines “child pornography,” otherwise known as child sexual abuse material (CSAM), as any visual depiction of sexually explicit conduct involving a minor (a person less than 18 years old).

On a podcast in 2020, the New York Times exposed the spread of millions of images and videos containing CSAM currently available on the internet. They revealed that from 2005-2020, reported files of sexual abuse content available on the web exploded by 15,000%, tripling between 2017 and 2019 alone.

In this podcast, it was also revealed that because both the FBI and Los Angeles Police Department have to prioritize CSAM reports for infants and toddlers, they could not effectively respond to reports for older children due to the massive amounts of cases.

That means these institutions of protection didn’t have enough bandwidth to protect 5-year-olds from sexual abuse. Let that sink in.

In a newly released report, the National Center for Missing and Exploited Children (NCMEC) revealed that its CyberTipline received 29.3 million reports of suspected child sexual exploitation in 2021, a 35% increase over 2020 (21.6 million) and well above the 16.9 million received in 2019. Over 99% of the reports the tipline received in 2021 concerned incidents of CSAM.

There has undoubtedly been an exponential increase in CSAM available on the internet in recent years and this epidemic is on an upward trajectory.

So the question must be asked: what is causing the increase in CSAM worldwide?

Barely Legal Porn Fuels Deviant Appetites for Child Sexual Abuse Material

Picture this: There is a red-headed girl in knee-high socks and a short skirt, apparently about 10 years of age, playing on an empty playground. Her hair is in pigtails with bows. As she slides down the slide, a man approaches. The camera closes in on the exit of the slide as she spreads her legs and begins to pleasure herself. This is a real porn scene on a legal porn site. Granted, the girl in this scene wasn’t actually 10, but she has intentionally been made to look that way.

In recent years, the “barely legal” or “teen” genre of porn has exploded. Scenes, much like the one described above, feature girls dressed in kid’s clothing. The setting is often a child’s bedroom, a doctor’s office, or a playground. In comes a man of power—a father, stepfather, doctor, or some other parental figure who often compliments the girl and the two begin engaging in sexual acts.

While CSAM is illegal, barely legal porn is widely accepted and simulates the exact same scenarios. Girls are made to look as young as possible, and in fact, the younger the better. Scenes often feature lollipops, pigtails, stuffed animals, bows in girls’ hair and knee socks. With the growing popularity, there is an entire group of boys and men being groomed to find young girls (and boys) sexually attractive. Thus the jump from simulated child porn to actual CSAM is not that far.

RELATED: Barely Legal: Teen Porn Promotes Child Sexual Abuse

In Raised on Porn, director and Exodus Cry founder Benjamin Nolot interviewed a man named Jacob, whose exposure to porn at the age of 11 led to a fierce addiction and eventually to consuming illegal pornographic content.

“After a while, the stuff that worked before didn’t work as well… you build up a tolerance. So, it would get more edgier and edgier. Stuff that I wouldn’t imagine ever looking at. Then I found a file sharing program which I was using to download music and stuff like that, and there, by some accident, I found illegal pornography. Child porn.

It was so easy to get. It was as easy as getting the regular stuff. I guess my curiosity got to me and I couldn’t believe it could be this easy, so, I downloaded it. And I saw it, and I got the rush. It worked. It worked like nothing else did anymore.”

Jacob was arrested and spent nearly five years in prison because of his addiction to illegal pornography.

Porn fuels deviant appetites among frequent consumers, and it’s well known that the preferences of frequent viewers often escalate. What previously held no interest for a porn user, or even disgusted them, now becomes necessary viewing to get the same dopamine hit.

In glorifying teen porn, society is putting the perverse sexual satisfaction of adults over the safety of children. We cannot expect to groom millions of consumers to be sexually attracted to kids and not expect it to flow out of the realm of simulation and into the very real abuse of children.

In an assessment of tipline reports, the Canadian Centre for Child Protection found that 78% of images and videos depict children under 12 years old.

According to Thorn, “if there’s an upload button on a platform, it will be used to host child sexual abuse material. This occurs regardless of the size of the platform, or its intended use.”

Rampant CSAM on Pornhub Exposed

14-year-old Rose Kalemba was raped by two men over a period of 12 hours while a third man filmed parts of the attack. She begged for her life, promising not to reveal their identities. Rose was later dumped in the street, left badly beaten with a stab wound on her leg and her clothes covered in blood.

When she was released from the hospital the next day, Rose attempted suicide and was stopped by her brother. A few months later, Rose was tagged in a link on MySpace which led to a page on Pornhub that featured videos of her attack – one had over 400,000 views at the time.

“I sent Pornhub begging emails. I pleaded with them. I wrote, ‘Please, I’m a minor, this was assault, please take it down.’” But she received no reply.

A 15-year-old girl who had been missing for a year was finally found after her mother was tipped off that her daughter was being featured in videos on Pornhub — 58 such videos of her rape and sexual abuse were discovered on the site.

These two tragedies were instrumental in sparking the Traffickinghub campaign, which Exodus Cry helped to advance globally. It resulted in Visa, Mastercard, and Discover halting all payment processing to the site and 10 million videos (80% of their content) being removed from Pornhub overnight. This content was removed because Pornhub had failed to verify the age or consent of people in it.

The Traffickinghub campaign helped bring unprecedented accountability to porn tube sites and resulted in several major industry-wide changes. Mastercard now requires robust age and consent verification for everyone featured in porn on all sites that use its payment processing. Major porn site xHamster removed its download button and deleted a huge amount of unverified content from its site.

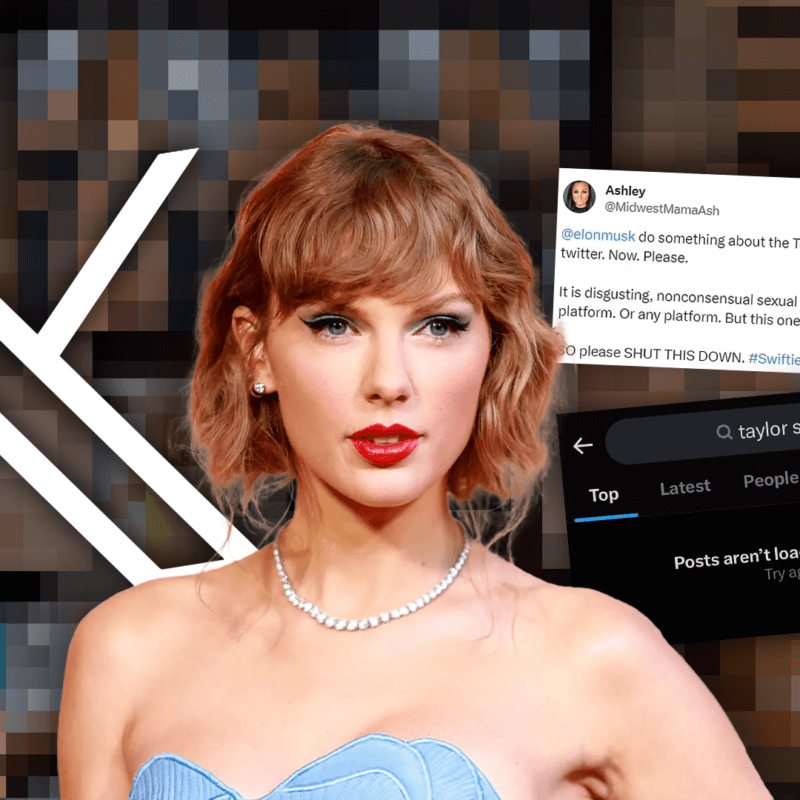

But it’s not just porn sites—many victims find videos and photos of their abuse on Twitter.

One lawsuit brought by the National Center on Sexual Exploitation (NCOSE) detailed the experience of two victims whose abuse was circulated on Twitter. The 13-year-old boys were deceived and blackmailed by a man pretending to be a 16-year-old girl who had sent the boys pictures of “herself” with promises of more if they sent nude photos as well. Three years later, the boys were notified by their classmates that photos they had sent (CSAM) were circulating on Twitter.

One of the boys’ parents contacted Twitter, and even after they had confirmed that he was a minor, Twitter responded that they “did not find anything against their community guidelines” in the tweet. The post stayed up, with more than 160,000 views.

It wasn’t until a member of the Department of Homeland Security stepped in that the tweet was removed.

RELATED: Twitter Welcomes Porn and Children

A Glimmer of Hope for Victims and Survivors

Twitter, Pornhub, and other sharing sites care about profit, not the abuse and exploitation of children.

With an increase in porn use, there is an increase in demand for content featuring children and that means, if unchecked, the prevalence of CSAM will continue to rise at crisis levels.

There is some positive momentum, though. According to an NCMEC report, 230 companies across the globe are now deploying tools that detect CSAM, a 21% increase since 2020. It’s also important to note that an increase in reports can be a good thing. Behind every CSAM file is a child victim who needs help, and just because cases are not being reported does not mean they don’t exist.

However, when victims of CSAM seek justice in the courts, Section 230 of the Communications Decency Act, a law that protects digital platforms from liability for third-party content, often blocks their lawsuits.

One proposed bill, the EARN IT Act (Eliminating Abusive and Rampant Neglect of Interactive Technologies), would fix this problem by carving claims brought under CSAM laws out of Section 230’s protections. If victims sued under those CSAM laws, Section 230 would no longer affect their lawsuit.

In other words, online companies would have to obey existing CSAM laws without any special protection from Section 230, giving victims better opportunities for justice.

While legislation to end protection for Big Tech is important, we cannot ignore the major source of the problem—Big Porn. The porn industry openly glorifies the sexualization of children and grooms consumers to have deviant appetites towards kids. This increasingly popular genre is a threat to the safety of children everywhere.

In a few months, we will be releasing a film that exposes the underbelly of the porn industry and takes a deep dive into barely legal porn. Stay tuned and follow us on Instagram to get more information as the release date approaches.

With your help, we can turn the tide against the deluge of child sexual abuse facing our generation.

1. Learn more about the EARN IT Act and how you can support this legislation.

2. Subscribe to our Magic Lantern Pictures YouTube channel to stay up to date on our latest film releases.

3. Give here to fund the fight against the exploitation of women and children.