Recently, a popular Twitch streamer was discovered to have been watching porn that appeared to feature fellow female streamers from the Twitch video game streaming community. While being found out for secretly watching your friends in porn is problematic for numerous reasons, the issue people took with the revelation was that the porn was “deepfake porn” and the female streamers did not consent to having it produced. In fact, they didn’t even know it existed.

If you’re unfamiliar with deepfake porn, it’s a type of AI-generated porn. The AI technology is used to create pornography by transposing the images of ordinary individuals or celebrities onto existing pornographic media. In essence, it means that any random person’s face can be used as a part of a graphic porn scene – without that person ever knowing.

One female Twitch streamer who discovered deepfake porn had been created of her said, “I was wishing for eye bleach. I saw myself in positions I would never agree to, doing things I would never want to do.”

Atrioc, the male streamer who was found watching the deepfake porn, took to Twitch to livestream an emotional apology. In the video, he tearfully admitted that he had paid for the deepfakes after seeing an ad on Pornhub while his wife was out of town. “I just clicked a f*cking link at 2 am, and the morals didn’t catch up to me.”

In a surprising twist, the original creator of the deepfakes removed all the content from the page he posted them to after seeing the reactions from the streamers. He posted a public apology, stating, “While this stuff is not illegal, it is still immoral… I feel like the total piece of sh*t I am.”

The Deepfake Phenomenon

Deepfakes are AI-generated pictures and videos that transpose someone’s face onto an existing piece of media, creating content meant to trick viewers. The technology has been around for a while, but recent advances in quality have made deepfakes much harder to detect.

There are numerous examples of deepfakes available online featuring politicians and celebrities, often making false or outrageous statements, and spreading disinformation on social media.

For some, this technology provides an amusing piece of entertainment. One of the most well-known deepfake examples is a video of a man named Miles Fisher impersonating Tom Cruise, while using deepfake technology to transform his face. The video is so realistic that it is nearly impossible to tell it’s not real.

Unfortunately, the creation of deepfake porn is by far the most popular use for the tech. According to Sensity.ai, 90% to 95% of deepfakes are non-consensual pornography and 100% of deepfake porn victims are women.

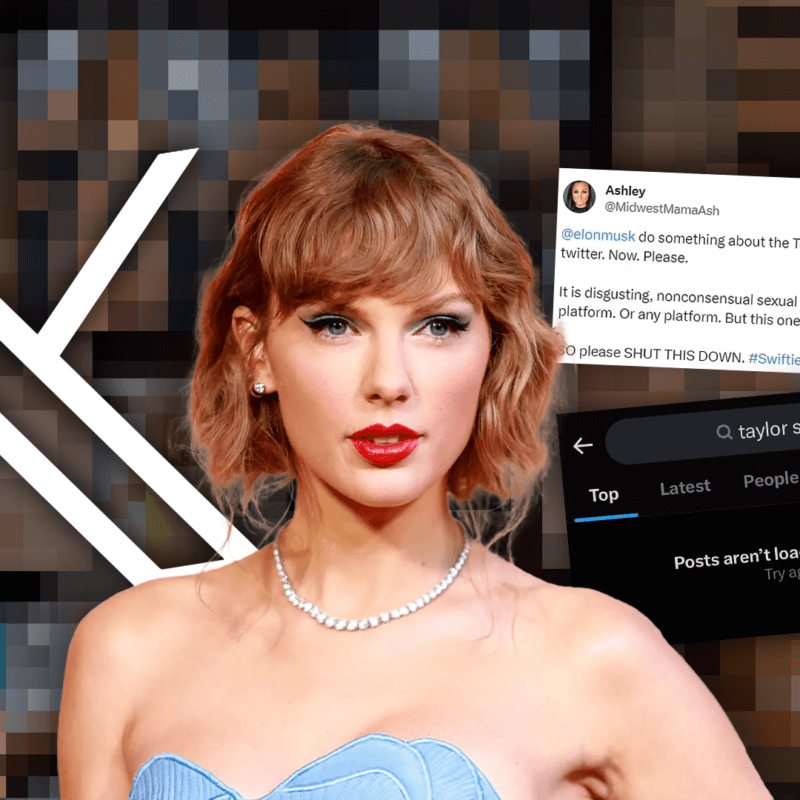

Celebrities like Kristen Bell, Scarlett Johansson, Taylor Swift, and Emma Watson have been the victims of deepfake porn videos in the past, with some of the videos being labeled as “leaked” in order to further trick viewers. It’s the inability to distinguish deepfake porn from real videos that adds to the distress experienced by victims.

But it’s not just celebrities – some perpetrators of deepfake porn are charging a mere $20 to create fabricated videos of whoever the buyer wishes – including exes, co-workers, children, you name it.

This creates serious concerns about the safety of women, and most importantly children, in this context. It’s absolutely imperative that new laws are quickly introduced to hold content creators accountable and specifically address the rampant deepfake issue.

The Victims of Deepfake Porn

The reality is, deepfake porn can now realistically be made of just about anyone with an online presence, and this opens people up to the very real possibility of their image being exploited online. As stated earlier, almost all deepfake porn is created with women’s faces, non-consensually.

One of the women featured in the Twitch deepfake videos, QTCinderella, shared a tearful video, saying, “This is what pain looks like… F*ck the constant exploitation and objectification of women. It’s exhausting.”

A woman who discovered deepfake porn of herself shared her story with Australian Women’s Health:

“That night, I went on to the porn site in question and scrolled through countless pictures of ‘me’ being abused and humiliated. Someone had taken holiday photos from my Facebook and Insta and uploaded them to a porn site…they said I was a ‘blonde slut’ and they fantasised about seeing me ‘used hard’ by other men. Nothing can describe the shock of seeing yourself be violated. My smiling face – lifted from treasured photos, when I’d been carefree and happy – had been edited on to images of gang rape and strangulation.”

ChatGPT and Artificial Intelligence

While new AI technology brings exciting prospects, like ChatGPT, which has been blowing up the internet in the three months since it launched, it also creates a slew of new risks.

Last month, a generative AI app called Lensa came under fire for allowing its system to create fully nude and hyper-sexualised images from users’ headshots, despite its “no nudes” policy. The system also failed to protect against the production of child sexual abuse material (commonly called “child porn”) via the Magic Avatars program.

When confronted on the abusive and exploitative capabilities of the program, Lensa placed the blame on users, stating it is “the result of intentional misconduct on the app.”

We unfortunately live in a porn-consumed culture, and one which often presents women and children as objects to be consumed sexually, whether they consent to it or not.

If left unchecked, this powerful technology will continue to be used to exploit, harm, objectify, and degrade women and children. So how do we combat this growing threat?

How do we stop the creation of deepfake porn?

Currently, there is no federal law against deepfake porn. While there is a federal image-based sexual abuse or “revenge porn” law that allows victims of non-consensual porn to file lawsuits against perpetrators, the law doesn’t address deepfakes specifically. And incredibly, there are only two states that have banned deepfake content – California and Virginia. But Atrioc was able to gain access to the content despite residing in California so, clearly, those laws aren’t effective in protecting victims of deepfake porn.

This is why we are advocating for Congress to pass the Preventing Rampant Online Technological Exploitation and Criminal Trafficking Act of 2022 (PROTECT Act). The PROTECT Act fills a gaping hole in existing law that allows pornography to be created, uploaded, and distributed online without the consent of persons depicted in the material. This has led to the victimization of countless women and men who have few or no remedies under present law.

The PROTECT Act would require websites that allow sexually explicit material to obtain verified consent forms from individuals uploading content, or appearing in uploaded content, and to remove images uploaded without consent.