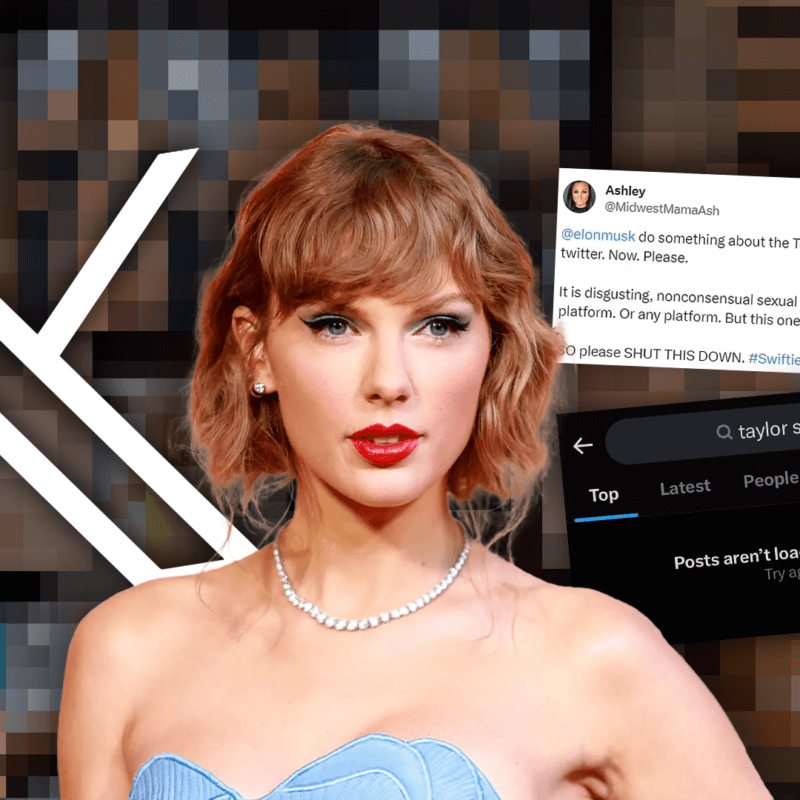

Recently, the media has been buzzing with news that Tesla CEO and quirky billionaire Elon Musk is buying Twitter for a whopping $44 billion. The deal first made headlines earlier this year, but it appeared it would fall through after Musk received unsatisfactory information about the number of bot accounts on the platform. However, on October 4, news broke that he was dropping the legal fight and proceeding with the purchase.

With the acquisition of Twitter, Musk has much more to worry about than bots.

Big brands have also been pulling their advertisements from the platform after the ads were found alongside tweets featuring child sexual abuse material (CSAM), also known as “child porn.” A Reuters review found that ads from more than 30 advertisers appeared on the profile pages of Twitter accounts peddling links to this illegal, exploitative content. For example, a promoted tweet for Cole Haan appeared next to a tweet in which a user said they were “trading teen/child” content.

Musk responded to the article on Twitter, calling it “extremely concerning.” However, the question remains: will he take the steps needed to make substantial change?

Twitter Is a Hub for CSAM

Twitter is a cesspool of predatory accounts that exist purely to display and trade CSAM, and has essentially become a marketplace for pedophiles. A 2015 investigation into the extent of pornography on Twitter revealed that “as many as 500,000 sexual images are posted daily, including images of hardcore and extreme sexual practices.”

We sent a researcher to Twitter to examine what kinds of porn are being featured on the platform. Within minutes, he stumbled upon a massive library of pornographic images and videos of children, known as child sexual abuse material (CSAM) or “child porn.”

His search revealed countless examples of teens selling nudes and leaked screen recordings of private videos from other apps, such as an underage couple performing oral sex in a car, a young girl masturbating in a bathroom, and young teens having sex in a car.

He also found numerous advertisements for batches of illegal content being sold openly on Twitter. One account in particular was advertising for “under 18 gays” to join a group chat. Another required a $10 entry fee via Cash App, PayPal, or Venmo to join a trading group chat on Telegram. That same account offered a full deck of content (more than 200K videos) for $150. Buyers could even specify the race and age of the victims in the purchased content, as if they were custom-ordering an art piece.

We quickly reached out to law enforcement and passed on all of our findings.

Twitter Knowingly Facilitates Child Exploitation

Twitter has been called “the most important social media platform for the porn world.” The site claims to have a “zero-tolerance policy” when it comes to CSAM. However, on any given day, countless CSAM images are tweeted, traded, exchanged, bought, and sold on the platform.

According to the National Center on Sexual Exploitation (NCOSE), Twitter knowingly distributed videos containing the abuse of a minor and refused to remove them. NCOSE filed a federal lawsuit on behalf of the minor in January 2021.

“At age 16, Plaintiff John Doe was horrified to find out sexually graphic videos of himself—made at age 13 under duress by sex traffickers—had been posted to Twitter. Both John Doe and his mother, Jane Doe, contacted the authorities and Twitter. Using Twitter’s reporting system, which according to its policies is designed to catch and stop illegal material like child sexual abuse material (CSAM) from being distributed, the Doe family verified that John Doe was a minor and the videos needed to be taken down immediately. Instead of the videos being removed, Twitter did nothing, even reporting back to John Doe that the video in question did not in fact violate any of their policies.”

One video of this trafficked boy received thousands of retweets and at least 167,000 views. Twitter refused to remove the video until a federal agent from the Department of Homeland Security (DHS) intervened. This is abhorrent.

In response, Twitter asked the judge to dismiss the case on the grounds that, under Section 230, it could not be held liable for refusing to remove the CSAM or for any injury to those involved.

By law, all CSAM is considered illegal contraband, and the mere possession of it can result in serious prison time for the average person. But tech giants like Facebook and Twitter generally enjoy immunity under Section 230 of the Communications Decency Act, which largely shields them from liability for the content their users upload to their platforms.

However, partly thanks to Traffickinghub, a global movement advanced by Exodus Cry to hold Pornhub accountable for distributing nonconsensual porn, scrutiny of child sexual abuse material is mounting across several platforms.

Twitter Refuses to Police Its Platform

But, as we mentioned above, Twitter is not merely hosting CSAM; it is acting as an exchange platform for illegal content and is, in some cases, grooming teens by teaching them to trade, buy, and sell CSAM among themselves.

Yet it’s not as if Twitter is incapable of policing its platform. One need only look at the many high-profile accounts Twitter has banned over the past few years, often for questionable reasons.

While the controversial platform is quick to remove accounts that do not align with its political ideology, it is slow, and even reluctant, to remove content that has been proven to depict minors and non-consensual sex acts.

Graphic sexual content is permitted under Twitter’s guidelines, which put the onus of regulation on the user. Users can “share graphic violence and consensually produced adult content within [their] Tweets, provided that [they] mark this media as sensitive.”

The images and videos shared under this policy include content depicting rape themes, adult-with-teen scenarios, incest, gang bangs, and BDSM. Countless accounts advertise webcams, porn sites, pay-per-view accounts, and in-person encounters.

And as if this weren’t bad enough, the platform sets its minimum age requirement at just 13 years old.

However, Elon Musk has the power to make substantial changes. Under Jack Dorsey and Parag Agrawal, rape, incest, sexual abuse, and illegal sexual content have been allowed to thrive unchecked. Will Elon Musk follow suit? Or will he step up to the plate and ban this horrific content from Twitter, making it a place that refuses to profit from the exploitation of children?

Elon Musk Must Rid Twitter of CSAM

By virtue of hosting and distributing porn, Twitter is an “adult” site. It cannot enable hardcore porn while, at the same time, marketing accounts to 13-year-olds. Twitter must choose: is it a porn site, or is it a social media site for kids, teens, and adults alike?

If a site wants to host porn, it is imperative that it verify the age and consent of everyone depicted and make all such content inaccessible to anyone under 18. These are incredibly basic steps that make a substantial difference in protecting children from brain-altering graphic sexual content.

If they choose to, Musk and Twitter could make a remarkable difference and take a significant step forward in the fight against sexual exploitation and the public health harms of pornography.

Sign Exodus Cry’s petition calling for robust age verification with government ID on all sites hosting pornographic content.

Read NCOSE’s statement on Twitter and sign their petition calling for Elon Musk to end sexual abuse and exploitation on Twitter.